Determining Clock Accuracy Requirements for UART Communications

Abstract: This application note discusses the timing requirements for the commonly used serial asynchronous communications protocol implemented in UARTs, and shows how to determine the tolerance for the UART clock source at both ends of an asynchronous link.

Background

The RS-232 specification dates back to 1962, when it was first released by the EIA (Electronic Industries Association). The specification has changed over time, incorporating higher data rates and closing the compatibility gaps between TIA (Telecommunications Industry Association) and international (ITU, ISO) standards. The current version of the RS-232 specification is EIA/TIA-232-F, dated October 1997.

The protocol benefited from the availability of MSI ICs from the late 1970s, which had the complexity to handle the standard at reasonable cost. These ICs are generically called UARTs (Universal Asynchronous Receiver/Transmitters). Many LSI ICs (including microcontrollers) now include the functionality. As is often the case, the availability of UARTs drove the industry to use the RS-232 serial protocol in non-RS-232 ways. Common examples are RS-485 transmissions, opto-isolated transmissions, and transmissions using a single-ended physical layer (e.g. 0 to 3.3V instead of ±5V or ±10V). This note covers the general timing aspects of the serial interface, not application nuances of handshaking or the physical layer, and so is applicable to all generalized UART applications.

UART Timing

A typical UART frame is shown in Figure 1. It comprises a Start bit, 8 data bits, and a Stop bit. Other variants are also possible in RS-232 applications - the data packet could be 5, 6, or 7 bits long, there could be 2 Stop bits, and a Parity bit could be inserted between the data packet and the Stop bit for rudimentary error detection. Figure 1 shows the signaling as seen at a UART's TXD (Transmit Data) or RXD (Receive Data) pins. RS-232 bus drivers invert as well as level shift, so a logic 1 is a negative voltage on the bus, and a logic 0 is a positive voltage.

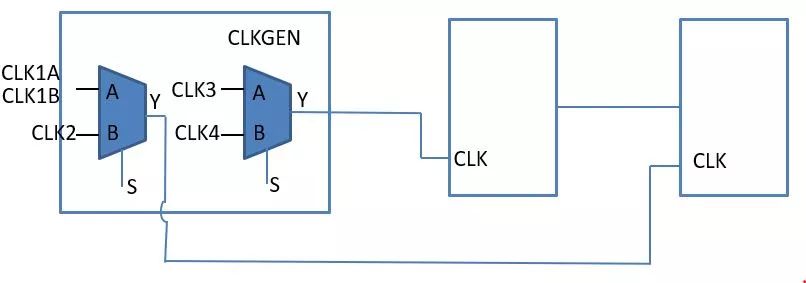

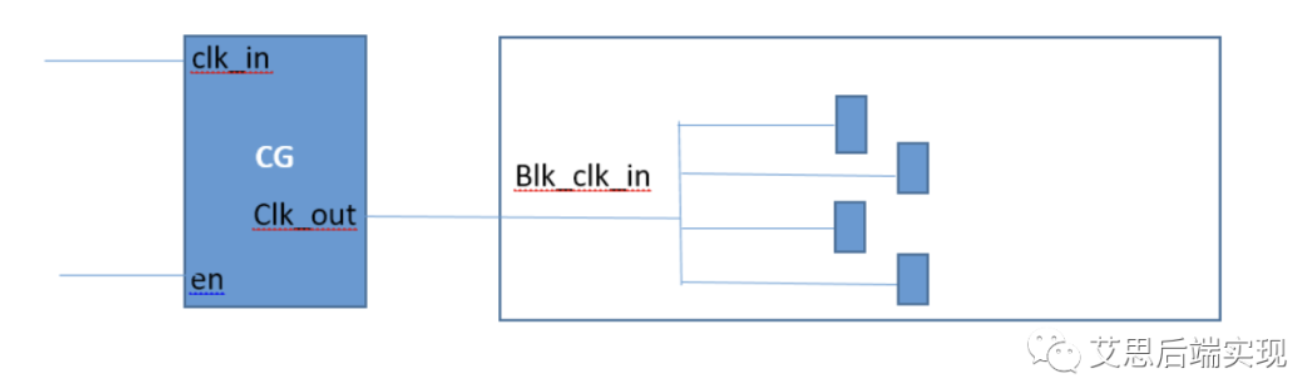

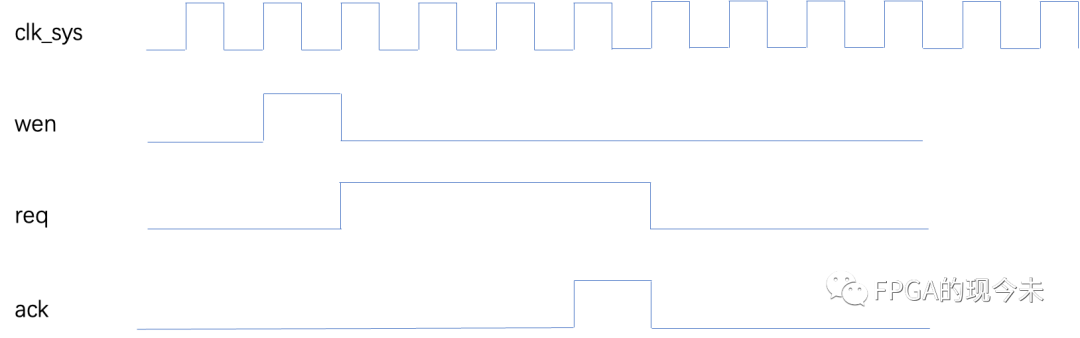

When two UARTs communicate, it is a given that both transmitter and receiver know the signaling speed. The receiver doesn't know when a packet will be sent with respect to the receiver clock, hence the protocol is termed asynchronous. The receiver circuitry is correspondingly more complex than that of the transmitter. While the transmitter simply has to shuffle out a frame of data at a defined bit rate, the receiver has to recognize the start of the frame to synchronize itself, and from that determine the best data sampling point for the bitstream.

Figure 2 shows a common method used by UART receivers to synchronize to a received frame. The receive UART uses a clock which is 16 times the data rate. A new frame is recognized by the falling edge at the beginning of the active-low Start bit, when the signal changes from the active-high Stop bit or bus idle condition. The receive UART resets its counters on this falling edge, expects the middle of the Start bit to occur after 8 clock cycles, and expects the midpoint of each subsequent bit to appear every 16 clock cycles thereafter. The Start bit is typically sampled at the mid-bit time to check that the level is still low, to ensure that the detected falling edge was a Start bit and not a noise spike. Another common improvement is to sample each bit not simply once at the mid-bit position (clock count 8 out of 16), but three times (clock counts 7, 8, and 9 out of 16).
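The sampling scheme described above can be sketched in a few lines of Python. This is an illustrative model only, not the logic of any particular UART: the function names are hypothetical, the line is modelled as one logic level per 16x clock tick starting at the Start bit's falling edge, the three samples are combined by majority vote (an assumption, since the text only says three samples are taken), and data is assumed to arrive least significant bit first.

```python
OVERSAMPLE = 16  # receive clock ticks per bit period, as in the text


def sample_ticks(bit_index, offsets=(7, 8, 9)):
    """16x clock counts, measured from the Start bit's falling edge,
    at which bit `bit_index` is sampled (0 = Start bit)."""
    return [bit_index * OVERSAMPLE + off for off in offsets]


def majority(levels):
    """Majority vote over the samples (assumed combining rule)."""
    return 1 if 2 * sum(levels) > len(levels) else 0


def ideal_waveform(byte, data_bits=8):
    """Perfectly timed frame: Start (0), data LSB first, Stop (1)."""
    bits = [0] + [(byte >> i) & 1 for i in range(data_bits)] + [1]
    return [bit for bit in bits for _ in range(OVERSAMPLE)]


def receive_frame(line, data_bits=8):
    """Decode one frame from `line`, a list of logic levels per 16x tick.

    Returns None if the Start bit is not low at mid-bit (noise spike),
    otherwise (value, frame_error).
    """
    def read(bit_index):
        return majority([line[t] for t in sample_ticks(bit_index)])

    if read(0) != 0:
        return None  # falling edge was not a genuine Start bit
    value = 0
    for i in range(data_bits):
        value |= read(1 + i) << i  # LSB first
    frame_error = read(1 + data_bits) != 1  # Stop bit should be high
    return value, frame_error
```

Note that `sample_ticks(9)` centres on tick 152, which is the Stop bit centre for a frame with 8 data bits and no parity.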

Timing Accuracy

So the question is: how accurate does the receive UART clock have to be to be sure of receiving data correctly? (Actually, a better question is to ask how different the transmit and receive UART clocks can be, since the absolute clock rate is unimportant for the purposes of accurate reception. More on this later.) To answer this, the first point to understand is that because the UART receiver synchronizes itself to the start of each and every frame, we only care about accurate data sampling during one frame. There isn't any buildup of error beyond a frame's Stop bit, which simplifies analysis because we only have to consider one frame as the worst case.

When do we get a timing error due to transmit-receive clock mismatch? Well, we are attempting to sample each bit at the midpoint (Figure 2). If we sample a bit half a bit period too early or too late, we will sample at the bit transition and have problems (Figure 3).

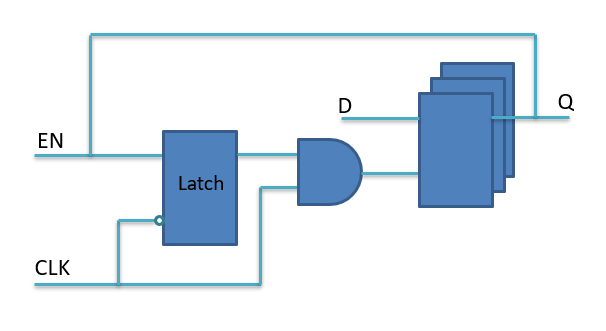

In reality, we can't (reliably) sample close to the bit transition point. The dominant reason for this is the finite (and typically slow) transmission rise and fall times, made even slower if overly capacitive cabling is used. A long bus incurs high attenuation, reducing noise margin and making it more important to sample when the bit level has settled. It is difficult to assess a worst-case acceptable sampling range across a bit's period in a quantitative manner. EIA/TIA-232-F does specify a maximum slew time of 4% of the bit period for a transmission, but this is difficult to achieve for long runs at 192 kbit/s.

So let us define two data path scenarios for the purpose of this note. Consider a 'nasty' scenario which can only be sampled reliably within the middle 50% of the bit time (Figure 4). This could equate to a long, capacitive RS-232 run. The 'normal' scenario can be sampled within the middle 75% of the bit time (Figure 5), equating to a relatively benign bus (such as a metre or two run with buffered CMOS logic levels or an RS-485 differential pair) within an equipment chassis.

For Figures 4 and 5 we can determine that the error budget is ±25% and ±37.5% of a bit period from the optimal bit-centre sampling point for the nasty and normal scenarios respectively. This error is equivalent to ±4 or ±6 periods of the 16x UART receive clock.

Another error to include in this budget is the synchronization error when the falling edge of the Start bit is detected. The UART will most likely be designed to start on the next rising edge of its 16x clock after Start bit detection. Since the 16x clock and the received data stream are asynchronous, the falling edge of the Start bit could occur just after a 16x clock rising edge, or just before one but not with enough setup time to use it. This means that the UART has an error of up to one 16x clock period built in at the synchronization point. So our error budget reduces from ±4 or ±6 clock periods to ±3 or ±5 periods.

We will presume that short-term clock errors (essentially, jitter) are very small, and therefore we are only considering mid-term and long-term errors. These errors boil down to a mismatch between the transmit UART and receive UART timing that is consistent during a frame. Since the timing is synchronized at the falling edge of the Start bit, the worst-case timing error will be at the last data sampling point, which is the Stop bit (see note 1). The optimum sampling point for the Stop bit is its bit-centre, which is 16 × 9.5 = 152 UART receive clocks after the original falling edge of the Start bit (one Start bit, 8 data bits, and half a Stop bit).

Now we can calculate our allowable error as a percentage. For the normal scenario, the clock mismatch error can be ±5/152 = ±3.3%, and for the nasty scenario it can be ±3/152 = ±2%. As hinted earlier, although the problem will materialize at the receive end of the link, clock mismatch is actually a tolerance issue shared between the transmit and receive UARTs. So presuming that both UARTs are attempting to communicate at exactly the same bit rate (baud), the allowable error can be shared, in any proportion, between the two UARTs.
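The budget arithmetic above can be written out as a short Python sketch. The function name and parameters are hypothetical; the parity option simply extends the same reasoning to a frame with one extra bit before the Stop bit, which the note itself does not work through.

```python
def clock_tolerance(window_fraction, data_bits=8, parity=False,
                    oversample=16, sync_error_ticks=1):
    """Allowable total TX/RX clock mismatch, as a fraction.

    window_fraction: usable middle portion of the bit time
                     (0.50 for the 'nasty' case, 0.75 for 'normal').
    """
    # Half of the usable window, in 16x clock periods: 4 or 6 ticks.
    half_window_ticks = window_fraction * oversample / 2
    # Subtract the synchronization error at the Start bit edge.
    budget_ticks = half_window_ticks - sync_error_ticks
    # Start bit + data bits (+ parity) + half a Stop bit.
    bits_to_stop_centre = 1 + data_bits + (1 if parity else 0) + 0.5
    return budget_ticks / (bits_to_stop_centre * oversample)


nasty = clock_tolerance(0.50)    # 3/152, about 2%
normal = clock_tolerance(0.75)   # 5/152, about 3.3%
print(f"nasty: ±{nasty:.2%}, normal: ±{normal:.2%}")
```

Under this model, adding a parity bit moves the Stop bit centre to 168 clocks and tightens both figures slightly, while a shorter data word relaxes them.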
Making use of the allowable error budget is easier in systems where both ends of the link are being designed at the same time, partly because the tolerance of both ends will be known, and partly because tradeoffs and cost savings can be made. In general a standard, low-cost ceramic resonator with ±0.5% accuracy and a further ±0.5% drift over temperature and life can be used for the clock source at both ends of the link. The worst case is then ±1% at each end, or ±2% across the link, which sits right at the roughly ±2% nasty-scenario budget derived earlier. If the system uses a master controller (typically a microcontroller or a PC) which uses a standard 100ppm crystal oscillator for the UART clock source, then the link error just about halves.

Be careful with microcontrollers that synthesize baud frequencies for their internal UARTs. Depending on the choice of microcontroller clock, the baud rates may not be exact. If the error can be determined, it can be easily included in the link error budget.

1. It may appear odd that the Stop bit is sampled, but it is. If the Stop bit is detected as a low level instead of the expected high level, UARTs typically report a Frame Error.
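As a rough illustration of the budgeting described in this section, the following Python sketch estimates the error of a synthesized baud rate and adds up worst-case contributions from both ends of a link. It assumes a simple integer divider feeding a 16x receive clock; real microcontrollers differ (some use fractional dividers or other oversampling ratios), so treat the divider model, the function names, and the example clock frequencies as assumptions.

```python
def synthesized_baud_error(f_clk_hz, target_baud, oversample=16):
    """Nearest-integer divider model (an assumption, not a specific MCU).

    Returns (divisor, actual_baud, fractional_error).
    """
    divisor = max(1, round(f_clk_hz / (oversample * target_baud)))
    actual = f_clk_hz / (oversample * divisor)
    return divisor, actual, (actual - target_baud) / target_baud


def link_ok(tx_errors, rx_errors, budget):
    """Sum worst-case error magnitudes at both ends against the budget.

    Each list holds fractional contributions (tolerance, drift,
    baud synthesis error). Returns (ok, worst_case_mismatch).
    """
    worst = sum(abs(e) for e in tx_errors) + sum(abs(e) for e in rx_errors)
    return worst <= budget, worst


# 100 ppm crystal at the master, ±0.5% ±0.5% resonator at the other end,
# checked against the unrounded nasty-scenario budget of 3/152.
print(link_ok([100e-6], [0.005, 0.005], 3 / 152))

# An 8 MHz clock cannot synthesize 115200 baud closely with this model.
print(synthesized_baud_error(8_000_000, 115200))
```

In this model an 11.0592 MHz clock divides exactly to common baud rates, whereas an 8 MHz clock targeting 115200 baud lands several percent off, which alone exceeds either budget. Checked against the unrounded 3/152 figure, two ±1% resonators come out marginally over the nasty-scenario budget, so the ±2% figure is best read as a rounded guide.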
